Instagram has recently expanded its “Limits” feature, which gives users more control over their interactions on the platform. Limits lets users hide direct messages and comments from accounts that may be harassing them. The aim is to give users a safer space to communicate and engage without fear of being targeted by bullies or other bad actors.

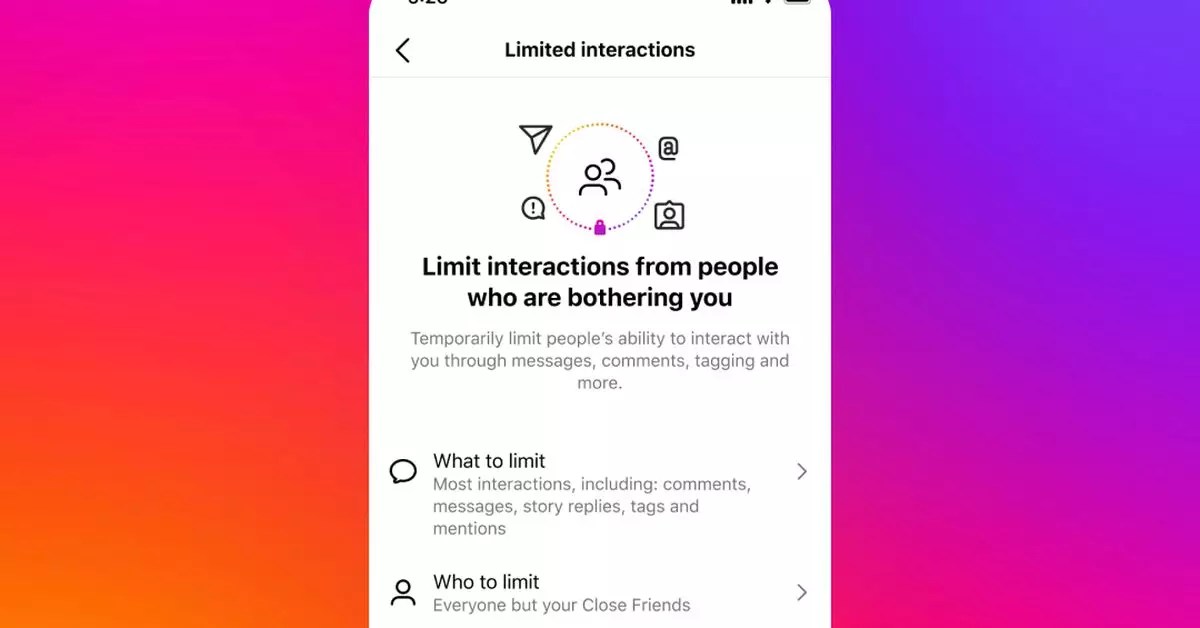

The updated “Limits” feature goes beyond the earlier settings, which only let users hide interactions from recent followers or from accounts that do not follow them. Users can now limit interactions to people on their Close Friends list, which gives them a more personalized and secure environment on Instagram.

When a user limits interactions to Close Friends, they will see direct messages, tags, and mentions only from people on that list. Accounts outside the list can still interact with the user’s posts, but those interactions are hidden from the user. Limited accounts are not notified that their content has been hidden, which leaves the user in control of their interactions.

Limits can be set for up to four weeks at a time and extended if needed. To turn them on, users open their profile settings, select the limited interactions option, and choose whom to limit: accounts that don’t follow them, recent followers, or everyone except their Close Friends.

Alongside Limits, Instagram has also strengthened its “Restrict” feature. A restricted account can no longer tag or mention the user, and its comments remain hidden. These updates are part of Instagram’s ongoing efforts to improve user safety and provide a positive experience on the platform.

Instagram is introducing these safety features at a time when the platform faces increased scrutiny from regulators, particularly in the US. The company has already taken steps to protect young users, such as preventing adults from messaging minors and hiding sensitive content related to suicide and eating disorders from teens. Instagram’s parent company, Meta, presents these measures as part of its commitment to a safe and welcoming environment for all users on its platforms.
